Please keep in mind that my first objective was to develop and train the model, so I didn’t spend much time on the design aspect of the framework, but I’m working on it (and pull requests are welcome)!

Sources

Here I describe other results obtained by training the same model on MNIST and SFDDD (check below for more info), an overview of the project, and possible future work with it.

Below I briefly describe how I got all of that, the sources I used, the structure of the residual model I trained, and the results I obtained.


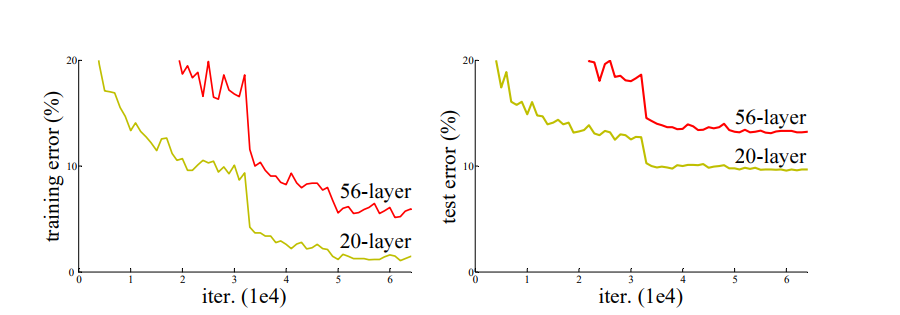

This chapter contains an explanation of how to implement both the forward and backward steps for each of the layers used by the residual model, the residual model’s implementation, and some methods to test a network before training.

After developing the model and a solver to train it, I conducted several experiments with the residual model on CIFAR-10. In this chapter I show how I tested the model and how the behavior of the network changes when one removes the residual paths, applies data-augmentation functions to reduce overfitting, or increases the number of layers. Then I show how to fool a trained network using randomly generated images or images from the dataset.
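One common way to test a layer before training is a numerical gradient check: the analytic backward step is compared against a centered finite-difference estimate. The sketch below does this for a ReLU layer. The function names (`relu_forward`, `relu_backward`, `numerical_gradient`, `rel_error`) are hypothetical and chosen for illustration; they are not necessarily the ones used in the thesis or in the framework.

```python
import numpy as np

def relu_forward(x):
    """Forward step: element-wise max(x, 0). The input is cached for backward."""
    out = np.maximum(x, 0.0)
    cache = x
    return out, cache

def relu_backward(dout, cache):
    """Backward step: pass the upstream gradient only where the input was positive."""
    x = cache
    return dout * (x > 0)

def numerical_gradient(f, x, dout, h=1e-5):
    """Centered finite-difference estimate of d(sum(f(x) * dout)) / dx."""
    grad = np.zeros_like(x)
    for idx in np.ndindex(*x.shape):
        old = x[idx]
        x[idx] = old + h
        pos = f(x)
        x[idx] = old - h
        neg = f(x)
        x[idx] = old  # restore the original value
        grad[idx] = np.sum((pos - neg) * dout) / (2 * h)
    return grad

def rel_error(a, b):
    """Maximum relative error between two arrays."""
    return np.max(np.abs(a - b) / np.maximum(1e-8, np.abs(a) + np.abs(b)))

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 4))
dout = rng.standard_normal((3, 4))  # stand-in for the upstream gradient

out, cache = relu_forward(x)
dx = relu_backward(dout, cache)
dx_num = numerical_gradient(lambda v: relu_forward(v)[0], x, dout)
error = rel_error(dx, dx_num)
print("relative error:", error)
```

A very small relative error suggests that the backward step matches the forward step. The same check can be applied to any layer that exposes a forward and a backward function.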

For now the thesis is available only in Italian. I am working on the English translation but don’t know if and when I’ll get the time to finish it, so I’ll try to briefly describe each chapter.

An introduction to the topic, a description of the thesis’s structure, and a brief history of neural networks from perceptrons to the Neocognitron.

A description of the fundamental mathematical concepts behind deep learning.

A description of the main concepts that enabled the achievements of the last decade, an introduction to the image classification and object localization problems, ILSVRC, and the models that obtained the best results from 2012 to 2015 in both tasks.

On Monday, June 13th, I graduated with a master’s degree in computer engineering, presenting a thesis on deep convolutional neural networks for computer vision.

Repositories: PyFunt, deep-residual-networks-pyfunt, PyDatSet

Convolutional Neural Networks for Computer Vision

I wanted to implement “Deep Residual Learning for Image Recognition” from scratch with Python for my master’s thesis in computer engineering. I ended up implementing a simple (CPU-only) deep learning framework along with the residual model, and trained it on CIFAR-10, MNIST and SFDDD.

I am proud to announce that you can now also read this post on KDnuggets!

However, it has been shown that residual networks are in essence just Recurrent Neural Networks (RNNs) without the explicit time-based construction, and they are often compared to gated recurrent architectures such as Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) networks, but without the gates.

Deep Residual Networks for Image Classification with Python + NumPy

Deep residual networks (DRNs), also called ResNets, are very deep Feed Forward Neural Networks (FFNNs) with extra connections called “skip connections”, which pass the input of one layer to a later layer (often 2 to 5 layers ahead) as well as to the next layer. Instead of trying to find a solution for mapping some input to some output across, say, 5 layers, the network is forced to learn to map some input to some output + some input. Basically, it adds an identity to the solution, carrying the older input over and serving it fresh to a later layer. It has been shown that these networks are very effective at learning patterns up to 150 layers deep, far more than the 2 to 5 layers one could normally expect to train.
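The idea of learning “some output + some input” can be sketched in a few lines of NumPy. This is a minimal illustration only: it uses fully connected layers instead of the convolutions of the actual model, and the names `plain_block` and `residual_block` are hypothetical.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def plain_block(x, W1, W2):
    """Two stacked layers without a skip connection."""
    return relu(relu(x @ W1) @ W2)

def residual_block(x, W1, W2):
    """The same two layers plus an identity skip connection: F(x) + x."""
    fx = relu(x @ W1) @ W2   # residual branch F(x)
    return relu(fx + x)      # the older input is carried over and added back

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 8))   # batch of 4 inputs with 8 features each
W_zero = np.zeros((8, 8))         # weights that contribute nothing

plain_out = plain_block(x, W_zero, W_zero)
res_out = residual_block(x, W_zero, W_zero)

print(np.all(plain_out == 0))            # the plain block loses the input entirely
print(np.allclose(res_out, relu(x)))     # the residual block still passes it through
```

With all-zero weights the plain block outputs zeros, while the residual block still forwards its input (up to the final ReLU). This is one intuition for why stacking many such blocks tends not to hurt: each block only has to learn a correction on top of the identity.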

Deep Residual Learning for Image Recognition | K. He, X. Zhang, S. Ren, J. Sun

Feed Forward Neural Network (FF or FFNN).
